Background

There seem to be write-ups of the prompts online, but very few explain the output format.

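This post records those formats. The output format is the same no matter which runtime you run the model with; the examples here use Ollama.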

The commands are taken from the deepseek-ocr model's own usage examples.

The test image is the first page of the paper "End-to-End Test-Time Training for Long Context".
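All of the prompts below are run through the ollama CLI. If you would rather call Ollama from code, here is a minimal sketch. It assumes a local Ollama server on its default address (http://localhost:11434) and uses its /api/generate endpoint, passing the image as base64 in the images field. Two further assumptions: that sending the bare instruction this way is equivalent to the CLI form, where the image path is written into the prompt, and that the mode names in PROMPTS (hypothetical labels for this sketch) are convenient to use.

```python
import base64
import json
import urllib.request

# The five prompts used in this post. The keys are hypothetical labels;
# the values are the instruction part of each ollama command.
PROMPTS = {
    "layout": "<|grounding|>Given the layout of the image.",
    "free_ocr": "Free OCR.",
    "parse_figure": "Parse the figure.",
    "extract_text": "Extract the text in the image.",
    "markdown": "<|grounding|>Convert the document to markdown.",
}


def build_payload(image_bytes: bytes, mode: str, model: str = "deepseek-ocr") -> dict:
    """Request body for Ollama's /api/generate endpoint (non-streaming).

    Assumption: image in "images" plus the bare instruction behaves like the
    CLI call that puts the image path at the start of the prompt.
    """
    return {
        "model": model,
        "prompt": PROMPTS[mode],
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # one JSON object instead of a token stream
    }


def run_ocr(image_path: str, mode: str, host: str = "http://localhost:11434") -> str:
    """Send one request to a local Ollama server and return the raw model text."""
    with open(image_path, "rb") as f:
        payload = build_payload(f.read(), mode)
    request = urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

For example, `print(run_ocr("page1.png", "layout"))`, where page1.png is a hypothetical file name, should print text in the same tag format as the first command below. That holds only if the equivalence assumption above is correct.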

Layout grounding (region coordinates)

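ollama run deepseek-ocr "/path/to/image\n<|grounding|>Given the layout of the image."

The output has one line per layout region: the region type inside <|ref|>…<|/ref|>, then its bounding box [[x1, y1, x2, y2]] (top-left and bottom-right corners) inside <|det|>…<|/det|>.

<|ref|>title<|/ref|><|det|>[[244, 140, 752, 162]]<|/det|>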

<|ref|>text<|/ref|><|det|>[[182, 187, 816, 258]]<|/det|>

<|ref|>title<|/ref|><|det|>[[468, 294, 528, 308]]<|/det|>

<|ref|>text<|/ref|><|det|>[[182, 315, 816, 480]]<|/det|>

<|ref|>image<|/ref|><|det|>[[161, 507, 840, 716]]<|/det|>

<|ref|>image_caption<|/ref|><|det|>[[143, 735, 855, 829]]<|/det|>

Free OCR

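ollama run deepseek-ocr "/path/to/image\nFree OCR."

The output is almost plain text, with a little markdown mixed in (an image placeholder, bold labels and $...$ inline math).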

End-to-End Test-Time Training for Long Context

Arnuv Tandon\*1,3, Karan Dalal\*1,4, Xinhao Li\*5, Daniel Koceja\*3, Marcel Rød\*3, Sam Buchanan4, Xiaolong Wang5, Jure Leskovec3, Sanmi Koyejo3, Tatsunori Hashimoto3, Carlos Guestrin3, Jed McCaleb1, Yejin Choi2, Yu Sun\*2,3

1 Astera Institute 2 NVIDIA 3 Stanford University 4 UC Berkeley 5 UC San Diego

Abstract

We formulate long-context language modeling as a problem in continual learning rather than architecture design. Under this formulation, we only use a standard architecture – a Transformer with sliding-window attention. However, our model continues learning at test time via next-token prediction on the given context, compressing the context it reads into its weights. In addition, we improve the model’s initialization for learning at test time via meta-learning at training time. Overall, our method, a form of Test-Time Training (TTT), is End-to-End (E2E) both at test time (via next-token prediction) and training time (via meta-learning), in contrast to previous forms. We conduct extensive experiments with a focus on scaling properties. In particular, for 3B models trained with 164B tokens, our method (TTT-E2E) scales with context length in the same way as Transformer with full attention, while others, such as Mamba 2 and Gated DeltaNet, do not. However, similar to RNNs, TTT-E2E has constant inference latency regardless of context length, making it 2.7× faster than full attention for 128K context. Our code is publicly available.

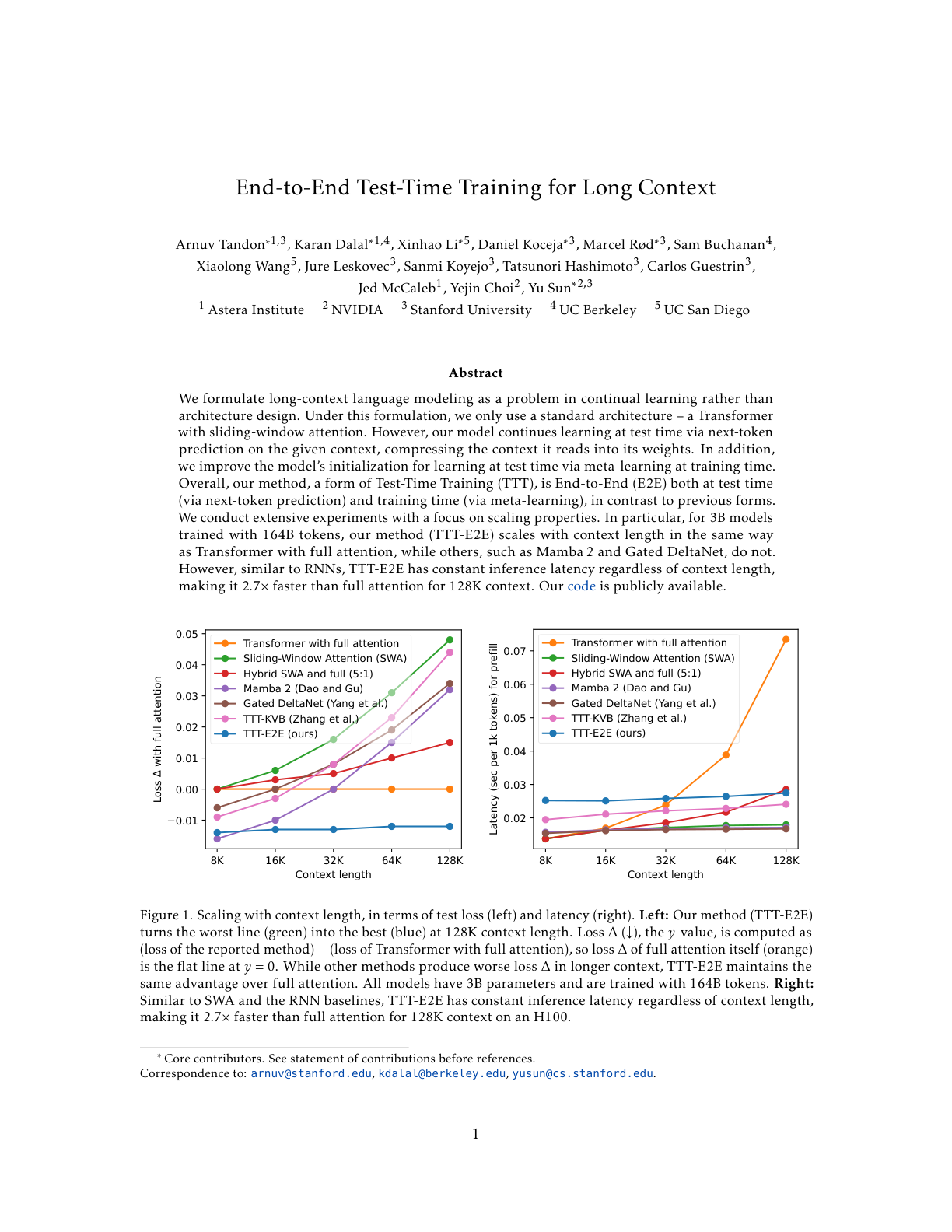

Figure 1. Scaling with context length, in terms of test loss (left) and latency (right). **Left:** Our method (TTT-E2E) turns the worst line (green) into the best (blue) at 128K context length. Loss $\Delta$ (↓), the $y$-value, is computed as (loss of the reported method) – (loss of Transformer with full attention), so loss $\Delta$ of full attention itself (orange) is the flat line at $y = 0$. While other methods produce worse loss $\Delta$ in longer context, TTT-E2E maintains the same advantage over full attention. All models have 3B parameters and are trained with 164B tokens. **Right:** Similar to SWA and the RNN baselines, TTT-E2E has constant inference latency regardless of context length, making it 2.7× faster than full attention for 128K context on an H100.

\* Core contributors. See statement of contributions before references.

Correspondence to: arnuv@stanford.edu, kdalal@berkeley.edu, yusun@cs.stanford.edu.

Locating figures?

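The output appears to be the same as that of the first (layout) command: the same region labels in the same order, with box coordinates differing by at most 2.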
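To put a number on "the same", one option is to parse the <|ref|>/<|det|> tags of both outputs and compute the intersection over union (IoU) of matching boxes. This is a minimal sketch. The regular expression assumes exactly the tag layout shown in this post, and regions are paired by position. The two strings are copied from the layout output above and from the "Parse the figure." output below.

```python
import json
import re
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)

# <|ref|>LABEL<|/ref|><|det|>[[x1, y1, x2, y2], ...]<|/det|>
TAG_RE = re.compile(r"<\|ref\|>(.*?)<\|/ref\|><\|det\|>(\[.*?\])<\|/det\|>", re.DOTALL)


def parse_grounding(output: str) -> List[Tuple[str, Box]]:
    """Return (label, box) pairs; a tag listing several boxes yields several pairs."""
    return [
        (m.group(1), tuple(box))
        for m in TAG_RE.finditer(output)
        for box in json.loads(m.group(2))
    ]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


LAYOUT = """<|ref|>title<|/ref|><|det|>[[244, 140, 752, 162]]<|/det|>
<|ref|>text<|/ref|><|det|>[[182, 187, 816, 258]]<|/det|>
<|ref|>title<|/ref|><|det|>[[468, 294, 528, 308]]<|/det|>
<|ref|>text<|/ref|><|det|>[[182, 315, 816, 480]]<|/det|>
<|ref|>image<|/ref|><|det|>[[161, 507, 840, 716]]<|/det|>
<|ref|>image_caption<|/ref|><|det|>[[143, 735, 855, 829]]<|/det|>"""

FIGURE = """<|ref|>title<|/ref|><|det|>[[244, 140, 752, 161]]<|/det|>
<|ref|>text<|/ref|><|det|>[[182, 189, 816, 258]]<|/det|>
<|ref|>title<|/ref|><|det|>[[468, 295, 528, 307]]<|/det|>
<|ref|>text<|/ref|><|det|>[[184, 316, 814, 480]]<|/det|>
<|ref|>image<|/ref|><|det|>[[161, 507, 840, 716]]<|/det|>
<|ref|>image_caption<|/ref|><|det|>[[143, 735, 855, 829]]<|/det|>"""

for (label_a, box_a), (label_b, box_b) in zip(parse_grounding(LAYOUT), parse_grounding(FIGURE)):
    print(f"{label_a:<14} {label_b:<14} IoU = {iou(box_a, box_b):.3f}")
```

The smallest box, the "Abstract" title, is the most sensitive to a one-unit shift, so it shows the lowest IoU even though its coordinates move by only 1.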

ollama run deepseek-ocr "/path/to/image\nParse the figure."

<|ref|>title<|/ref|><|det|>[[244, 140, 752, 161]]<|/det|>

<|ref|>text<|/ref|><|det|>[[182, 189, 816, 258]]<|/det|>

<|ref|>title<|/ref|><|det|>[[468, 295, 528, 307]]<|/det|>

<|ref|>text<|/ref|><|det|>[[184, 316, 814, 480]]<|/det|>

<|ref|>image<|/ref|><|det|>[[161, 507, 840, 716]]<|/det|>

<|ref|>image_caption<|/ref|><|det|>[[143, 735, 855, 829]]<|/det|>

Extract plain text

ollama run deepseek-ocr "/path/to/image\nExtract the text in the image."

The image region is skipped (unlike Free OCR, no image placeholder appears) and only the text is output.
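There is one visible difference from Free OCR in the output below. Inline math is delimited as \(...\) (for example \(\Delta\)) instead of $...$. If you mix outputs from several prompts, a small normalization step keeps them consistent. This is a minimal sketch that assumes $...$ and $$...$$ are the convention you want. It also assumes the text contains no literal \( or \[ outside math.

```python
import re

# \( ... \)  ->  $ ... $      and      \[ ... \]  ->  $$ ... $$
INLINE_MATH_RE = re.compile(r"\\\((.+?)\\\)", re.DOTALL)
DISPLAY_MATH_RE = re.compile(r"\\\[(.+?)\\\]", re.DOTALL)


def normalize_math(text: str) -> str:
    """Rewrite LaTeX-style math delimiters to dollar delimiters."""
    # Function replacements avoid re-interpreting backslashes inside the math.
    text = DISPLAY_MATH_RE.sub(lambda m: "$$" + m.group(1).strip() + "$$", text)
    return INLINE_MATH_RE.sub(lambda m: "$" + m.group(1).strip() + "$", text)


print(normalize_math(r"Loss \(\Delta\) (\(\downarrow\)), the \(y\)-value"))
# -> Loss $\Delta$ ($\downarrow$), the $y$-value
```

The non-greedy match stops at the first closing \), so nested parentheses without a backslash, as in \(\Delta (\downarrow)\) from the markdown output, stay inside one math span.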

End-to-End Test-Time Training for Long Context

Arnuv Tandon\*1,3, Karan Dalal\*1,4, Xinhao Li\*5, Daniel Koceja\*3, Marcel Rød\*3, Sam Buchanan4, Xiaolong Wang5, Jure Leskovec3, Sanmi Koyejo3, Tatsunori Hashimoto3, Carlos Guestrin3, Jed McCaleb1, Yejin Choi2, Yu Sun\*2,3

1 Astera Institute 2 NVIDIA 3 Stanford University 4 UC Berkeley 5 UC San Diego

Abstract

We formulate long-context language modeling as a problem in continual learning rather than architecture design. Under this formulation, we only use a standard architecture – a Transformer with sliding-window attention. However, our model continues learning at test time via next-token prediction on the given context, compressing the context it reads into its weights. In addition, we improve the model’s initialization for learning at test time via meta-learning at training time. Overall, our method, a form of Test-Time Training (TTT), is End-to-End (E2E) both at test time (via next-token prediction) and training time (via meta-learning), in contrast to previous forms. We conduct extensive experiments with a focus on scaling properties. In particular, for 3B models trained with 164B tokens, our method (TTT-E2E) scales with context length in the same way as Transformer with full attention, while others, such as Mamba 2 and Gated DeltaNet, do not. However, similar to RNNs, TTT-E2E has constant inference latency regardless of context length, making it 2.7× faster than full attention for 128K context. Our code is publicly available.

Figure 1. Scaling with context length, in terms of test loss (left) and latency (right). **Left:** Our method (TTT-E2E) turns the worst line (green) into the best (blue) at 128K context length. Loss \(\Delta\) (\(\downarrow\)), the \(y\)-value, is computed as (loss of the reported method) – (loss of Transformer with full attention), so loss \(\Delta\) of full attention itself (orange) is the flat line at \(y = 0\). While other methods produce worse loss \(\Delta\) in longer context, TTT-E2E maintains the same advantage over full attention. All models have 3B parameters and are trained with 164B tokens. **Right:** Similar to SWA and the RNN baselines, TTT-E2E has constant inference latency regardless of context length, making it 2.7× faster than full attention for 128K context on an H100.

* Core contributors. See statement of contributions before references.

Correspondence to: arnuv@stanford.edu, kdalal@berkeley.edu, yusun@cs.stanford.edu.

Convert to markdown

ollama run deepseek-ocr "/path/to/image\n<|grounding|>Convert the document to markdown."

This gives the most complete output: the grounding tags, each followed by the markdown text of the region it locates.

<|ref|>title<|/ref|><|det|>[[243, 138, 752, 161]]<|/det|>

# End-to-End Test-Time Training for Long Context

<|ref|>text<|/ref|><|det|>[[181, 186, 816, 258]]<|/det|>

Arnuv Tandon \(^{*,1,3}\) , Karan Dalal \(^{*,1,4}\) , Xinhao Li \(^{*,5}\) , Daniel Koceja \(^{*,3}\) , Marcel Rod \(^{*,3}\) , Sam Buchanan \(^{4}\) , Xiaolong Wang \(^{5}\) , Jure Leskovec \(^{3}\) , Sanmi Koyejo \(^{3}\) , Tatsunori Hashimoto \(^{3}\) , Carlos Guestrin \(^{3}\) , Jed McCaleb \(^{1}\) , Yejin Choi \(^{2}\) , Yu Sun \(^{*,2,3}\) \(^{1}\) Astera Institute \(^{2}\) NVIDIA \(^{3}\) Stanford University \(^{4}\) UC Berkeley \(^{5}\) UC San Diego

<|ref|>sub_title<|/ref|><|det|>[[468, 294, 527, 308]]<|/det|>

## Abstract

<|ref|>text<|/ref|><|det|>[[183, 314, 814, 480]]<|/det|>

We formulate long- context language modeling as a problem in continual learning rather than architecture design. Under this formulation, we only use a standard architecture - a Transformer with sliding- window attention. However, our model continues learning at test time via next- token prediction on the given context, compressing the context it reads into its weights. In addition, we improve the model's initialization for learning at test time via meta- learning at training time. Overall, our method, a form of Test- Time Training (TTT), is End- to- End (E2E) both at test time (via next- token prediction) and training time (via meta- learning), in contrast to previous forms. We conduct extensive experiments with a focus on scaling properties. In particular, for 3B models trained with 164B tokens, our method (TTT- E2E) scales with context length in the same way as Transformer with full attention, while others, such as Mamba 2 and Gated DeltaNet, do not. However, similar to RNNs, TTT- E2E has constant inference latency regardless of context length, making it \(2.7 \times\) faster than full attention for 128K context. Our code is publicly available.

<|ref|>image<|/ref|><|det|>[[159, 506, 840, 716]]<|/det|>

<|ref|>image_caption<|/ref|><|det|>[[143, 734, 855, 830]]<|/det|>

<center>Figure 1. Scaling with context length, in terms of test loss (left) and latency (right). Left: Our method (TTT-E2E) turns the worst line (green) into the best (blue) at 128K context length. Loss \(\Delta (\downarrow)\) , the \(y\) -value, is computed as (loss of the reported method) – (loss of Transformer with full attention), so loss \(\Delta\) of full attention itself (orange) is the flat line at \(y = 0\) . While other methods produce worse loss \(\Delta\) in longer context, TTT-E2E maintains the same advantage over full attention. All models have 3B parameters and are trained with 164B tokens. Right: Similar to SWA and the RNN baselines, TTT-E2E has constant inference latency regardless of context length, making it \(2.7 \times\) faster than full attention for 128K context on an H100.

</center>
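In this output, every region's text follows its tag pair. That means it can be split into (label, box, text) blocks, which gives you both a clean markdown document and per-region coordinates. This is a minimal sketch under several assumptions:

- The tag layout is exactly as shown above, and only the first box of each tag is kept.
- The "word- word" gaps (long- context, End- to- End) are treated as spurious spaces and closed. This would also wrongly join a genuine "pre- and post-" construction.
- Coordinates are normalized to a 0 to 999 grid, so they can be mapped back to pixels. The scale of 999 is an assumption; check it against your own images.

```python
import json
import re
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in the model's coordinate grid

TAG_RE = re.compile(r"<\|ref\|>(.*?)<\|/ref\|><\|det\|>(\[.*?\])<\|/det\|>", re.DOTALL)
HYPHEN_GAP_RE = re.compile(r"(?<=\w)- (?=\w)")  # "long- context" -> "long-context"


def split_blocks(output: str) -> List[Tuple[str, Box, str]]:
    """Split grounded markdown into (label, first box, text) triples.

    A block's text is everything between its tag pair and the next one,
    so image regions usually end up with empty text.
    """
    matches = list(TAG_RE.finditer(output))
    blocks = []
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(output)
        boxes = json.loads(m.group(2))
        blocks.append((m.group(1), tuple(boxes[0]), output[m.end():end].strip()))
    return blocks


def to_markdown(output: str, fix_hyphens: bool = True) -> str:
    """Drop the tags and join the non-empty block texts into one markdown string."""
    md = "\n\n".join(text for _, _, text in split_blocks(output) if text)
    return HYPHEN_GAP_RE.sub("-", md) if fix_hyphens else md


def to_pixels(box: Box, width: int, height: int, scale: int = 999) -> Box:
    """Map a box to pixel coordinates, assuming a 0..scale normalized grid."""
    x1, y1, x2, y2 = box
    return (
        round(x1 / scale * width),
        round(y1 / scale * height),
        round(x2 / scale * width),
        round(y2 / scale * height),
    )


sample = (
    "<|ref|>title<|/ref|><|det|>[[243, 138, 752, 161]]<|/det|>\n"
    "# End-to-End Test-Time Training for Long Context\n\n"
    "<|ref|>image<|/ref|><|det|>[[159, 506, 840, 716]]<|/det|>\n\n"
    "<|ref|>text<|/ref|><|det|>[[183, 314, 814, 480]]<|/det|>\n"
    "We formulate long- context language modeling as a problem in continual learning."
)
for label, box, _ in split_blocks(sample):
    print(label, box, to_pixels(box, width=1000, height=1000))
print(to_markdown(sample))
```

Labels seen in this post are title, sub_title, text, image and image_caption. Filtering on the label, for example keeping only image boxes, is a simple way to crop figures out of the page.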